Fostering Explainability for Generative AI Systems

Explainability is a major consideration in the trustworthiness of any AI system. Increasingly, it is also a legal requirement. Yet, it is notoriously difficult to understand and explain the workings of generative AI systems.

Organizations at the cutting edge of responsible AI adoption are devoting significant resources to AI explainability. JP Morgan has created and resourced an Explainable AI Center of Excellence, which informs its efforts in other areas, such as fairness, accountability and compliance. DARPA, the R&D agency of the US Department of Defense, has led major efforts to advance AI explainability in areas like medicine and transportation.

So, what is AI explainability, and how should organizations approach it for generative AI systems?

What is Explainability?

The NIST AI Risk Management Framework describes explainability as "a representation of the mechanisms underlying AI systems’ operation." So, the key question in AI explainability is: "Why did the AI system provide a certain output?" More specifically, why did the combination of models and data provide a certain output?

There are many potential audiences for AI explainability. These include the AI system’s developers, deploying organizations, human operators, sellers/buyers, compliance teams, regulators, affected end-users and the public. Each of these audiences typically prefers communications at different levels of detail.

Underlying these explanations is something more technical – interpretability. If explainability is the “why,” interpretability is the “how.”

Bank Loan Example

Take the example of a bank using an AI system to approve or deny loan applications. In the US, when denying a loan, a bank must provide the applicant with an explanation of why the decision was made. The purpose of this explanation is to demonstrate that accurate information was used in a way that conforms with the bank’s decision-making processes. It also gives the applicant an opportunity to correct any inaccurate information or to dispute the rigor of the process.

A loan applicant likely prefers clearly communicated information, like “Your credit score was 20 points short of the automatic approval threshold, and the assets you provided were $8,000 short of securing the loan outright.”

Yet, to be able to provide this kind of clear statement, the bank’s data and AI team needs to understand how the data inputs and the models constituting the AI system result in the outputs (AI interpretability). This, in turn, requires an understanding of how the models and datasets were developed and what kinds of governance they were subjected to throughout their lifecycles.
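To make the link between interpretability and explanation concrete, here is a minimal Python sketch of how a transparent decision rule could be turned into an applicant-facing statement like the one above. Everything in it is an assumption made for illustration: the `Application` fields, the `AUTO_APPROVAL_SCORE` value and the approve-if-either-test-passes rule are hypothetical and do not describe any real bank's policy. A production system built on a learned model would need an attribution or interpretability method in place of these hand-written rules.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical policy value, chosen only for illustration.
AUTO_APPROVAL_SCORE = 700


@dataclass
class Application:
    credit_score: int
    assets: float       # value of assets offered as security, in dollars
    loan_amount: float  # amount requested, in dollars


def explain_decision(app: Application) -> Tuple[bool, List[str]]:
    """Return (approved, reasons) under the assumed two-test policy."""
    score_gap = AUTO_APPROVAL_SCORE - app.credit_score
    asset_gap = app.loan_amount - app.assets

    # Assumed rule: either test passing is enough to approve.
    if score_gap <= 0:
        return True, ["Your credit score met the automatic approval threshold."]
    if asset_gap <= 0:
        return True, ["The assets you provided secure the loan outright."]

    # Both tests failed, so report each shortfall in plain language.
    return False, [
        f"Your credit score was {score_gap} points short of the "
        "automatic approval threshold.",
        f"The assets you provided were ${asset_gap:,.0f} short of "
        "securing the loan outright.",
    ]


if __name__ == "__main__":
    approved, reasons = explain_decision(
        Application(credit_score=680, assets=42_000, loan_amount=50_000)
    )
    print("Approved" if approved else "Denied")
    for reason in reasons:
        print("-", reason)
```

The point of the sketch is that each sentence given to the applicant maps directly to a quantity the data and AI team can inspect. That kind of traceability is much harder to obtain once the decision logic is a large learned model.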

Explainability for Generative AI

Since generative AI systems are based on massive neural networks that are trained on and process vast amounts of data, their workings are difficult to understand and explain. Even the developers of the most powerful generative AI systems have trouble explaining why their systems generate specific inaccurate outputs when prompted in certain ways.

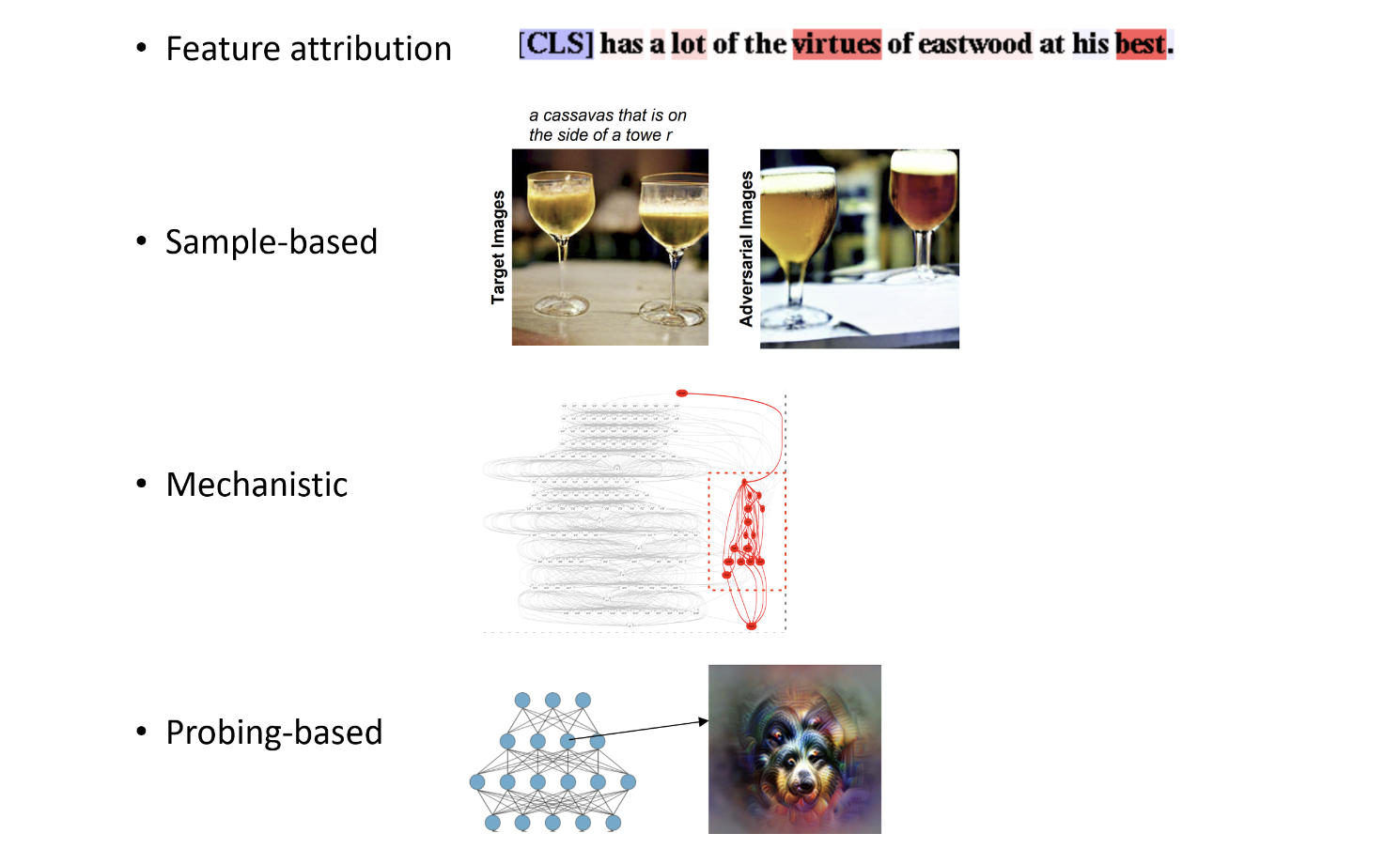

Researchers have adopted several different approaches to this problem, and scholars have begun to survey and organize them. For example, Johannes Schneider from the University of Liechtenstein has developed a taxonomy of research approaches to explainability for generative AI systems.

What does this mean for practitioners?

Leaders within organizations best understand their use cases, the significance of their impacts and their regulatory requirements. These considerations should inform the objectives of their generative AI explainability efforts. The standards will be higher when the stakes are higher – such as in certain medical and financial use cases.

Collaborating across functions to maintain and develop high-quality documentation throughout each AI system’s lifecycle is a prerequisite for AI explainability efforts. Additionally, it is important to create the capability to monitor and adopt the generative AI explainability methods relevant to the organization’s common AI use cases.

Enzai is here to help

Enzai’s AI GRC platform can help your company deploy AI in accordance with best practices and emerging regulations, standards and frameworks, such as the EU AI Act, the Colorado AI Act, the NIST AI RMF and ISO/IEC 42001. To learn more, get in touch here.
